In the heart of Paris, a beacon of innovation in artificial intelligence (AI) shines brightly with the emergence of Mistral AI. This ambitious startup is swiftly carving out its niche, positioning itself as a formidable contender against giants like OpenAI and Anthropic. With the unveiling of Mistral Large, its flagship language model, Mistral AI is not just entering the arena; it’s setting the stage for a revolution in AI reasoning capabilities, directly challenging leading models such as GPT-4 and Claude 2.

Introducing Mistral Large and Le Chat: Pioneering AI Solutions

Mistral AI’s launch of Mistral Large marks a significant milestone in the evolution of large language models. Designed with advanced reasoning capabilities, Mistral Large aims to rival and surpass the performance of established models. Complementing this, Mistral AI introduces Le Chat, a versatile chat assistant now available in beta, offering a fresh alternative to ChatGPT that promises enhanced user interactions through AI.

You can access Le Chat by creating a free account at this link: Le Chat

A Rapid Ascent: Mistral AI’s Financial Journey

Since its inception in May 2023, Mistral AI has captured the attention of the tech and investment communities alike. The company’s impressive trajectory began with a staggering $113 million seed funding round, quickly followed by a $415 million funding round led by Andreessen Horowitz (a16z). These financial milestones underscore the market’s confidence in Mistral AI’s vision and the potential impact of its technology.

Open Source Foundations with a Strategic Shift

Initially, Mistral AI championed an open-source approach, reminiscent of its founders’ roots in Google’s DeepMind and Meta. This ethos was evident in its first model, which was released under an open-source license. However, as the company evolves, its strategy appears to be aligning more closely with OpenAI’s, particularly in offering Mistral Large through a paid API, indicating a nuanced shift in its business model towards usage-based pricing.

Competitive Pricing and Language Support

Mistral AI stands out not only for its technology but also for its pricing. At $8 per million input tokens and $24 per million output tokens, Mistral Large is significantly cheaper than GPT-4 while remaining a capable alternative. Its support for multiple languages, including English, French, Spanish, German, and Italian, broadens its appeal on the global stage.

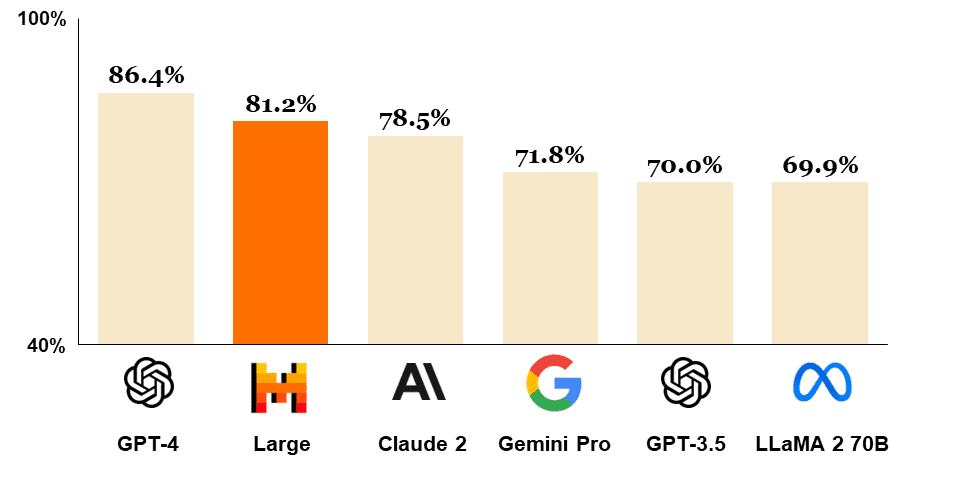

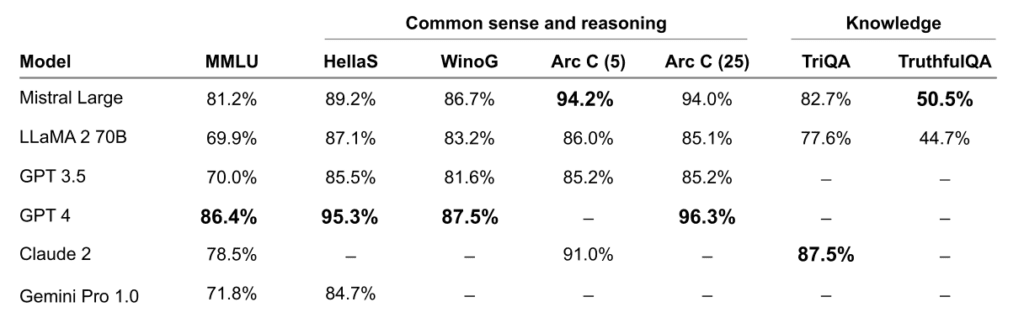

Mistral Large vs. GPT-4 and Claude 2: A Comparative Analysis

Direct comparisons remain difficult because AI benchmarks change quickly and vendors choose which results to publish. Mistral AI claims that Mistral Large ranks second only to GPT-4 on several benchmarks. That claim may reflect selective benchmarking, so independent real-world testing will be needed to confirm it.

Mistral Large introduces a range of new features and advantages:

- It boasts native fluency in English, French, Spanish, German, and Italian, demonstrating a deep understanding of both grammar and cultural nuances.

- The model’s context window of 32K tokens allows precise information recall from large documents.

- Its ability to follow instructions precisely allows developers to create specific moderation policies. This feature was instrumental in establishing system-level moderation for Le Chat.

- Mistral Large’s inherent function-calling capability, coupled with a constrained output mode as seen on La Plateforme, facilitates the development of applications and the modernization of technology stacks on a grand scale.
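To make the last bullet more concrete, here is a minimal sketch of two chat request bodies: one that offers the model a callable function, and one that asks for JSON-only output. It assumes the OpenAI-style `tools` and `response_format` fields described in Mistral's API documentation and the `mistral-large-latest` model alias. The `get_order_status` function and its parameters are hypothetical and exist only for illustration. The code builds the payloads locally and does not contact the API.

```python
import json

MODEL = "mistral-large-latest"  # assumed model alias; check the current model list


def build_tool_request(user_message: str) -> dict:
    """Build a chat request body that offers the model one callable function."""
    # Hypothetical function definition, described with JSON Schema.
    order_status_tool = {
        "type": "function",
        "function": {
            "name": "get_order_status",
            "description": "Look up the shipping status of an order.",
            "parameters": {
                "type": "object",
                "properties": {
                    "order_id": {"type": "string", "description": "Order identifier."}
                },
                "required": ["order_id"],
            },
        },
    }
    return {
        "model": MODEL,
        "messages": [{"role": "user", "content": user_message}],
        "tools": [order_status_tool],
        "tool_choice": "auto",  # let the model decide whether to call the function
    }


def build_json_mode_request(user_message: str) -> dict:
    """Build a chat request body that asks for JSON-only (constrained) output."""
    return {
        "model": MODEL,
        "messages": [{"role": "user", "content": user_message}],
        "response_format": {"type": "json_object"},
    }


if __name__ == "__main__":
    print(json.dumps(build_tool_request("Where is order 1234?"), indent=2))
```

When the model decides to call the function, the application is expected to run it and send the result back in a follow-up message; that round trip is omitted here to keep the sketch short.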

Le Chat: A New Era of Chat Assistants

Le Chat, Mistral AI’s latest offering, is a chat assistant available in public beta that showcases the company’s commitment to refining AI interactions. A paid enterprise version is also planned. Note that Le Chat cannot currently access the web, so its answers draw only on the underlying model’s own knowledge.

Strengthening Ties: Mistral AI’s Partnership with Microsoft

In a strategic move, Mistral AI announces a partnership with Microsoft, leveraging Azure’s extensive customer base to distribute its models. This collaboration not only enhances Mistral AI’s market presence but also signifies Microsoft’s inclusive approach towards AI development, fostering a diverse ecosystem within Azure’s model catalog.

The Path Forward: Innovation and Collaboration

Mistral AI’s journey is a testament to the transformative potential of artificial intelligence. Through strategic partnerships, innovative product launches, and a focus on competitive pricing, Mistral AI is not just challenging the status quo; it’s redefining it. As the company continues to evolve, its impact on the AI landscape and beyond remains a compelling narrative of innovation, ambition, and the relentless pursuit of excellence.

How to Run Mistral Large Locally?

Currently, Mistral Large is not available to download or run locally. You will have to use Le Chat or the API to access it.

What is Mistral Large and how does it compare to other AI models like GPT-4?

Mistral Large is the flagship large language model developed by Paris-based AI startup Mistral AI. It is designed to compete with top-tier models such as GPT-4 and Claude 2, and it offers native fluency in multiple languages, a 32K-token context window, precise instruction-following (useful for defining moderation policies), and built-in function calling.

What languages does Mistral Large support?

Mistral Large supports English, French, Spanish, German, and Italian, with a nuanced understanding of grammar and cultural contexts in each language.

What is the context window size of Mistral Large, and why does it matter?

The context window size of Mistral Large is 32K tokens, which is significant because it allows for precise information recall from large documents, enhancing the model’s ability to understand and generate more contextually relevant responses.
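As a rough illustration of what a 32K-token window means in practice, the sketch below estimates whether a document fits. It assumes about four characters per token, a common rule of thumb for English text and not Mistral's actual tokenizer, and it treats 32K as 32,768 tokens. Both constants are assumptions; for exact counts, use the model's real tokenizer.

```python
CONTEXT_WINDOW_TOKENS = 32_768  # assumption: "32K" taken as 32 * 1024
CHARS_PER_TOKEN = 4             # rough heuristic for English, not the real tokenizer


def estimate_tokens(text: str) -> int:
    """Estimate the token count from character length (ceiling division)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(text: str, reserved_for_output: int = 1_000) -> bool:
    """Return True if the estimated prompt size leaves room for the reply."""
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_WINDOW_TOKENS
```

Under this heuristic, a document of about 100,000 characters fits with room for a reply, while one of 200,000 characters would need to be split or summarized first.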

Can Mistral Large be used to develop applications?

Yes, Mistral Large’s inherent function-calling capability, especially when combined with a constrained output mode, enables developers to use it for application development and technology stack modernization at scale.

What is Le Chat, and how does it relate to Mistral Large?

Le Chat is a new chat assistant service launched by Mistral AI that utilizes the Mistral Large model among others. It is designed to provide users with a beta experience of interacting with AI in a chat format, offering different models for varied interaction styles.

Is there a cost to using Mistral Large?

Yes, Mistral Large is offered through a paid API with usage-based pricing, currently set at $8 per million input tokens and $24 per million output tokens.

What makes Mistral AI’s approach to AI development unique?

Mistral AI initially positioned itself with an open-source focus for its first model, including access to model weights. However, for its larger models like Mistral Large, the company has shifted towards a business model more akin to OpenAI’s, offering its AI services through a paid API.

How does the pricing of Mistral Large compare to GPT-4?

Mistral Large is significantly cheaper than GPT-4. Compared with GPT-4 at the 32K-token context window size, it costs roughly 7.5x less for input tokens and 5x less for output tokens.
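The price gap with GPT-4 can be reproduced with simple arithmetic. The sketch below uses the Mistral Large prices quoted in this article ($8 and $24 per million tokens) and assumes OpenAI's list prices for GPT-4 with a 32K context at the time of the launch: $60 per million input tokens and $120 per million output tokens. Prices change, so treat the constants as a snapshot.

```python
# Prices in US dollars per one million tokens.
MISTRAL_LARGE = {"input": 8.0, "output": 24.0}  # from this article
GPT4_32K = {"input": 60.0, "output": 120.0}     # assumed launch-time list prices


def request_cost(prices: dict, input_tokens: int, output_tokens: int) -> float:
    """Cost in dollars of one request at the given per-million-token prices."""
    total = input_tokens * prices["input"] + output_tokens * prices["output"]
    return total / 1_000_000


def price_ratio(kind: str) -> float:
    """How many times cheaper Mistral Large is for 'input' or 'output' tokens."""
    return GPT4_32K[kind] / MISTRAL_LARGE[kind]


if __name__ == "__main__":
    print(price_ratio("input"))   # 7.5
    print(price_ratio("output"))  # 5.0
    # A request with 10,000 input tokens and 1,000 output tokens:
    print(request_cost(MISTRAL_LARGE, 10_000, 1_000))  # 0.104
    print(request_cost(GPT4_32K, 10_000, 1_000))       # 0.72
```

The ratio differs between input and output because the two providers weight the two token types differently, which is why the saving on a real workload depends on how much text goes in versus how much comes out.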

Can I download Mistral Large for local use?

Currently, Mistral Large is not available for download or local use. Users must interact with the model through Le Chat or the API.

Why can’t I download Mistral Large for offline use?

At the time of writing, Mistral AI has not released the weights of Mistral Large; the model is offered only as a hosted service. A hosted deployment lets the company manage updates, security, and scaling centrally, so users get the current version of the model without the complexity of a local installation.

How can I access Mistral Large if it’s not available for local download?

Access to Mistral Large is facilitated through its Chat service, Le Chat, or directly via a paid API. These online platforms allow users to leverage the capabilities of Mistral Large for various applications.
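For readers who want to try the API route, here is a minimal sketch using only Python's standard library. It assumes the chat completions endpoint at `https://api.mistral.ai/v1/chat/completions`, the `mistral-large-latest` model alias, an OpenAI-style response shape, and an API key stored in a `MISTRAL_API_KEY` environment variable (the variable name is our own choice). Verify these against Mistral's current documentation before relying on them.

```python
import json
import os
import urllib.request

API_URL = "https://api.mistral.ai/v1/chat/completions"  # assumed endpoint
MODEL = "mistral-large-latest"                           # assumed model alias


def build_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Prepare (but do not send) a POST request for a single-turn chat."""
    body = {"model": MODEL, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the prompt and return the assistant's reply text."""
    request = build_request(prompt, os.environ["MISTRAL_API_KEY"])
    with urllib.request.urlopen(request, timeout=60) as response:
        payload = json.load(response)
    # Assumes an OpenAI-style response shape: choices[0].message.content
    return payload["choices"][0]["message"]["content"]


# Only contacts the API when run as a script with a key configured.
if __name__ == "__main__" and "MISTRAL_API_KEY" in os.environ:
    print(ask("Say hello in French."))
```

Splitting request construction from sending keeps the network call in one place and makes the payload easy to inspect before any tokens are billed.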